AlphaGo, an AI program developed by Google DeepMind that has mastered the ancient game of Go, won this year’s Innovation Lions Grand Prix at the Cannes Lions International Festival of Creativity.

Go was invented in China more than 2,500 years ago and was traditionally regarded as one of the four cultivated arts of the scholar gentleman. It is considerably more complex than chess: it has more possible board positions than there are atoms in the observable universe, which long put it beyond the reach of computer programs. AlphaGo’s genius lies in its ability to play using something like intuition, just as a human player would.
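The comparison with atoms concerns board positions, and a crude upper bound makes the scale concrete. Each of the 361 points on a 19×19 board is empty, black or white, so there are at most 3^361 configurations. This sketch assumes the commonly quoted estimate of about 10^80 atoms in the observable universe, and it counts all configurations rather than only legal positions (the legal count is smaller, but still on the order of 10^170).

```python
BOARD_POINTS = 19 * 19   # intersections on a standard Go board
ATOMS_EXPONENT = 80      # assumed estimate: about 10^80 atoms in the observable universe

# Crude upper bound: each point is empty, black or white.
upper_bound = 3 ** BOARD_POINTS

# Python integers are exact, so the digit count gives the order of magnitude.
exponent = len(str(upper_bound)) - 1

print(f"3^{BOARD_POINTS} is roughly 10^{exponent}")
print(f"that is about 10^{exponent - ATOMS_EXPONENT} times the estimated number of atoms")
```

Working with exact integers avoids floating-point overflow and makes the order-of-magnitude gap easy to read off.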

AlphaGo, developed by Google DeepMind

Innovation Lions jury president Emad Tahtouh summarised, “AlphaGo, by any measure – whether you’re looking at complexity or simplicity or innovation or its potential use, its current use, its success – is incredible. It encapsulates everything we’re looking for in innovation. Its potential throughout the world and in so many other avenues is incredible.”

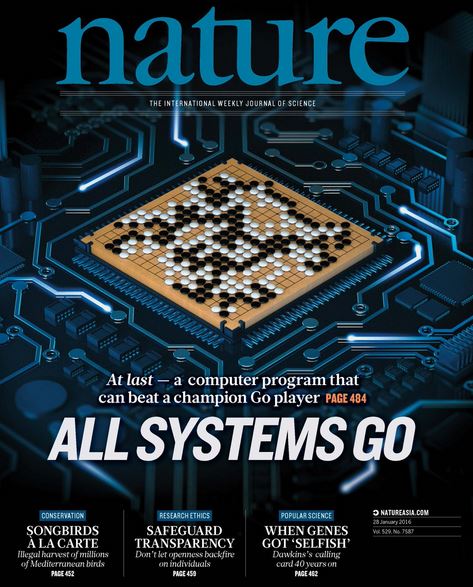

AlphaGo on the front cover of Nature magazine.

AlphaGo’s capabilities were most clearly demonstrated in March this year, when it defeated Lee Sedol, one of the world’s greatest players, in a five-game match broadcast live with a $1 million prize at stake. Lee Sedol lost the series 1-4, though it is worth noting that he won one of the games with a completely unexpected and seemingly illogical move that outfoxed AlphaGo; that game has since been described as “a masterpiece [that] will almost certainly become a famous game in the history of Go”.

Seoul, South Korea, March 10th. AlphaGo vs. Lee Sedol, one of the greatest players in the world.

Google DeepMind is a Google subsidiary that conducts research into artificial intelligence. Following the Innovation Lions pitch session at Cannes Lions, SHP+ caught up with Thore Graepel, Research Lead at Google DeepMind and Professor of Computer Science at University College London (UCL), to find out more.

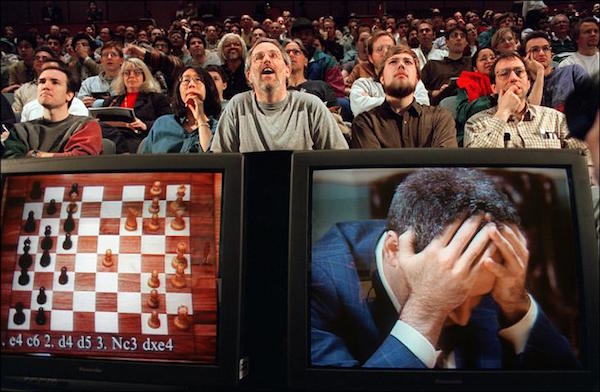

SHP+: What was the genesis of the AlphaGo project?

Thore Graepel: Ever since Deep Blue beat Garry Kasparov at chess (1997), the big remaining grand challenge was to tackle a much more complex game. Computers at that point were very poor at [Go, because it] is incredibly complex and humans apply a lot of perception, intuition and creativity in the face of that. The challenge for us was: can we replicate that? Can we create a computer program that combines the reasoning you use when you play a game with the intuition of really only looking at promising moves and judging the overall position correctly? We then used a neural network approach to tackle that.
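The combination Graepel describes (search guided by an intuition about which moves are promising, plus a judgement of how good the resulting position is) can be sketched as a move-selection rule. What follows is a PUCT-style rule similar in spirit to the selection step described in DeepMind’s 2016 Nature paper on AlphaGo. It is not DeepMind’s code: the `Edge` class, the `select_move` function, the `c_puct` value, the move labels and the prior numbers are all hypothetical. In a real system, the priors and evaluations would come from trained neural networks.

```python
import math
from dataclasses import dataclass


@dataclass
class Edge:
    """Search statistics for one candidate move (hypothetical structure)."""
    prior: float            # "intuition": how promising a policy network rates the move
    visits: int = 0         # how many times search has explored this move
    value_sum: float = 0.0  # sum of position evaluations seen after this move

    @property
    def mean_value(self) -> float:
        # Q: average evaluation of the move so far; 0.0 before any visit.
        return self.value_sum / self.visits if self.visits else 0.0


def select_move(edges: dict[str, Edge], c_puct: float = 1.5) -> str:
    """Return the move with the highest Q + U score."""
    total_visits = sum(edge.visits for edge in edges.values())

    def score(edge: Edge) -> float:
        # U rewards high-prior moves and shrinks as a move gets explored.
        # The "+ 1" lets priors decide the very first pick (a choice of this sketch).
        exploration = c_puct * edge.prior * math.sqrt(total_visits + 1) / (1 + edge.visits)
        return edge.mean_value + exploration

    return max(edges, key=lambda move: score(edges[move]))


# Hypothetical priors: the "intuition" strongly prefers D4 over a corner move at A1.
edges = {"D4": Edge(prior=0.6), "Q16": Edge(prior=0.3), "A1": Edge(prior=0.1)}
print(select_move(edges))  # D4: with no visits yet, the prior decides

# Suppose searching D4 ten times kept producing poor evaluations.
edges["D4"] = Edge(prior=0.6, visits=10, value_sum=-5.0)
print(select_move(edges))  # Q16: search now shifts attention to the next candidate
```

The exploration term lets search spend most of its effort on moves the intuition favours. The averaged evaluation then lets reasoning overrule that intuition when a favoured move turns out badly.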

1997: Deep Blue defeated chess grandmaster Garry Kasparov

SHP+: What was the timeline of the project?

Thore Graepel: The project started out as an internship project. Once it was clear that these neural networks were very good at playing Go, we doubled down on the project and added many more people. It was a two-year project: the first year was this early internship phase, and in the second year we had anywhere between ten and twenty people working on it.

Lee Sedol, 9-dan professional Go player

SHP+: When did you first test AlphaGo against a human player?

Thore Graepel: We needed a human evaluation…to show that we could actually beat a professional Go player. We asked Fan Hui (a Chinese-born French player and three-time European Go champion), and AlphaGo beat him 5-0…which was great news for us because up until that point we had only evaluated AlphaGo against other Go programs. Fan Hui was a bit devastated, but he came around and grasped that this is something great that the game of Go can really benefit from.

SHP+: After his match with AlphaGo, Lee Sedol said, “As a professional Go player, I never want to play this kind of match again.” How does the worldwide Go community feel about AlphaGo?

Thore Graepel: They have mixed feelings about it. A lot of people at the beginning didn’t think that AlphaGo would be able to beat Lee Sedol. Then, when it started winning, they had to adapt to the idea that it might be possible. Toward the end, people were very enthusiastic about it. They gave AlphaGo the 9-dan (top-level) professional certificate, and they really started to embrace the idea that they might learn a lot from AlphaGo. Just as Deep Blue hasn’t had any negative effect on chess, I think the existence of AlphaGo has had no bad effect on Go.

An Atari 2600 console

SHP+: Why are games a good tool for developing AI?

Thore Graepel: It goes back to the first Nature (science journal) paper that came out of DeepMind, which was the idea of taking 50 arcade games from the Atari 2600 console and training a single agent, a single neural network, to be able to play each one of these games…Every single game is…like a microcosm of the world. Think of Pac-Man: there’s a maze, there’s spatial navigation, things pursue you, you have to escape, you have to eat pills. It’s a very rich little world. Clearly, an AI that learned to play that game would have understood something about the world. Now take that times 50, where all of these games cover different aspects of cognition, and you have a really interesting test.

That’s the foundation of what we do. We look for these games…that capture an aspect of the real world and then we train AI agents to cope with those. Or if they can’t, then we invent something new.
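The learning loop behind this approach can be illustrated at toy scale. DeepMind’s Atari work trained a deep Q-network from game screens and scores. The sketch below keeps only the underlying Q-learning update and swaps the neural network for a lookup table. The five-cell corridor “game”, the `step` and `train` functions, and every hyperparameter are hypothetical choices made for illustration.

```python
import random

N_STATES = 5           # corridor cells 0..4 (hypothetical toy "game")
GOAL = N_STATES - 1    # reaching the last cell ends the episode
ACTIONS = (-1, +1)     # index 0 moves left, index 1 moves right


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Apply a move; reward 1.0 only when the goal cell is reached."""
    next_state = min(max(state + action, 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.1, seed: int = 0) -> list[list[float]]:
    """Tabular Q-learning: q[state][action_index] estimates discounted future reward."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually take the best-looking action, sometimes explore;
            # ties (e.g. at the very start) are broken at random.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Target: immediate reward plus discounted value of the best next action.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q = train()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(GOAL)]
print(policy)
```

The agent is never told the rules. It learns which action pays off in each state purely from reward. Replacing the table with a neural network that reads the screen is, very roughly, what lets one agent scale to many different games.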

The game Pac-Man

SHP+: What could this technology be used for in the future?

Thore Graepel: The beautiful thing is that the way we solved the problem is very general: it combines these ideas of learning and reasoning. So, for example, you can think of applications in healthcare or research where people can be assisted in making the right choices, where the AI can read much more of the medical research literature and therefore give better hints as to what the next step is, and maybe plan better ahead, to assist researchers or doctors.
